Adobe is integrating Firefly, its generative AI technology, directly into Photoshop. The integration introduces a new Generative Fill feature that can expand images or remove objects from them. It also brings AI to the power and precision of Photoshop’s existing tools.

Firefly is Adobe’s name for its generative AI, essentially its answer to Midjourney. It was first announced in March. While it isn’t yet on par with its rivals, it is getting there, and Adobe claims that, unlike its competitors, it did not take artists’ work to train the algorithm.

Adobe describes this pairing of Firefly and Photoshop as a “deep connection” between generative AI and Photoshop’s core tools, which means it offers more than simple image generation.

The new feature Adobe is focusing on most is Generative Fill, which on paper sounds similar to what OpenAI calls “outpainting” in DALL-E. In a nutshell, the feature automatically matches the perspective, lighting, and style of an image, letting users add or remove content non-destructively in just a few minutes using simple text prompts.

Adobe says Generative Fill lets editors rethink concepts quickly because it can add to or subtract from images non-destructively.

“Create new content in generative layers, allowing users to quickly explore an array of creative possibilities and then reverse the effects whenever they’d like, without affecting the original image,” according to the company. “Experiment with out-of-the-box concepts, come up with new ideas, and produce endless variations of high-quality content as fast as you can type.”

Adobe shared a few examples of what the technology can do:

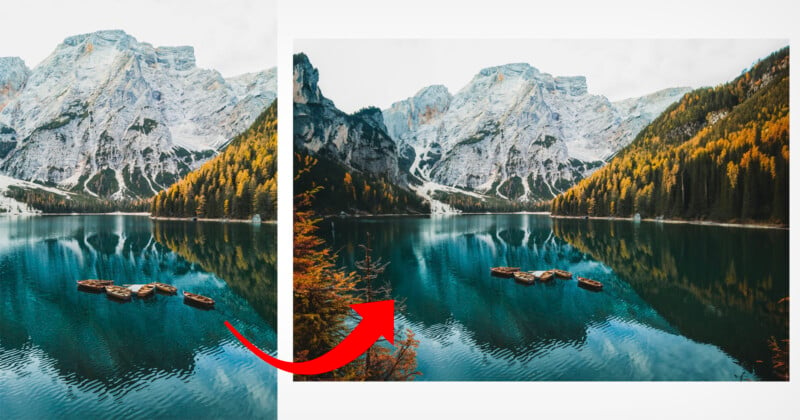

Where Generative Fill sets itself apart from OpenAI’s outpainting is in its ability to completely reimagine a background, which Adobe indicates is possible with the new feature (with varying degrees of success).

In many of the images, the expanded regions are hard to spot at first glance. On closer inspection, however, a loss of clarity and sharpness reveals where the original image ends and the content created with Generative Fill begins. A few of the examples also look obviously edited, so Generative Fill’s AI is clearly not perfect in these early days.

Generative Fill is also available as a module in the browser-based Firefly beta, which lets users try the feature online.

Generative Fill isn’t the only Firefly-powered addition coming to Photoshop. Adobe also showed off a “Contextual Task Bar” that can intelligently select and mask a subject with a single click. Photoshop also gains an updated “Remove” tool that lets editors select an object and remove it, as well as a collection of Adjustment Presets that automatically alter an image to match a particular aesthetic.

Adobe says all of these AI improvements are built on the same foundation as Firefly.

“Adobe develops and deploys all AI capabilities with a customer-centric approach and in accordance with its AI Ethics principles to ensure transparency of data and content. Generative Fill supports Content Credentials, which play a vital role in ensuring people know whether content was made by a human, generated by AI, or edited with AI,” the company says.

“Content Credentials are similar to ‘nutrition labels’ for digital content. They remain linked to content no matter where it is published, used, or stored, allowing accurate attribution and helping consumers make informed choices about online content.”

Photoshop’s Generative Fill feature is currently being released as a desktop beta and is expected to reach general availability in the second half of 2023.